The recent partnership between Meta and Stanford’s Deliberative Democracy Lab to conduct a community forum on generative AI has shed light on what actual users expect from responsible AI development, and what concerns them about it. Drawing on input from more than 1,500 participants from Brazil, Germany, Spain, and the United States, the forum examined key issues and challenges in AI development. One notable finding is that a majority of participants in each country believe AI has had a positive impact. Most participants also agreed that AI chatbots should be able to use past conversations to improve their responses, as long as users are informed. Similarly, most accepted that chatbots may exhibit human-like traits, again provided that users are aware of this.

Despite this broadly positive outlook, the forum also revealed regional differences in perception. Participants from different countries showed varying levels of approval of, and skepticism toward, specific aspects of AI development. This diversity of opinion highlights how complex public perception of AI is and suggests that developers may need approaches tailored to each region. Understanding these regional nuances is crucial for addressing users’ concerns and adjusting AI development strategies accordingly.

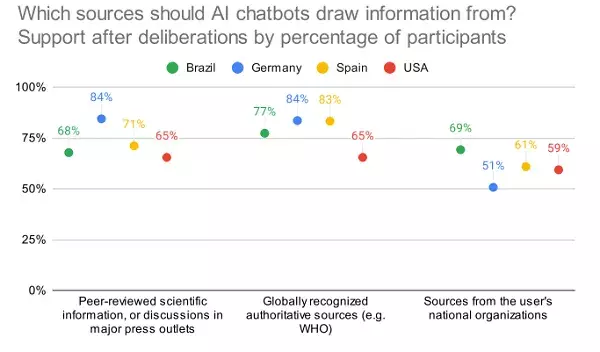

Another ethical question raised in the forum concerns romantic relationships with AI chatbots. While this may seem unconventional, it underscores how human-AI interaction is evolving and why ethical guidelines are needed in this area. The study also produced insights into AI disclosure and information sourcing, indicating growing awareness of the importance of transparency and accountability in AI systems.

The findings also bear on ongoing controversies over the controls and biases built into AI tools. For instance, Google faced criticism over biased results from its Gemini system, while Meta’s Llama model was accused of producing sanitized and politically correct depictions. These examples show how strongly the companies behind AI models shape the outcomes those models generate, raising questions about corporate responsibility and the need for regulatory oversight. How much control corporations should have over AI tools, and whether broader regulation is needed to ensure fairness and accuracy, remains an open debate that deserves careful consideration.

In light of the forum’s findings, there is a pressing need for universal guardrails that protect users against misinformation and misleading responses from AI systems. Although the implications of AI tools are not yet fully understood, proactive measures are clearly needed to uphold ethical standards and safeguard societal well-being. The ongoing debate over how to regulate AI development will be vital in shaping the future of these technologies and ensuring they are integrated into society responsibly and beneficially.