The step size, also known as the learning rate, is a key factor in the efficiency of the stochastic gradient descent (SGD) algorithm. Various strategies have been developed to improve the performance of SGD by adjusting the step size as training proceeds. A common challenge with these strategies is how the step sizes are distributed across the iterations, which can limit the effectiveness of the algorithm.

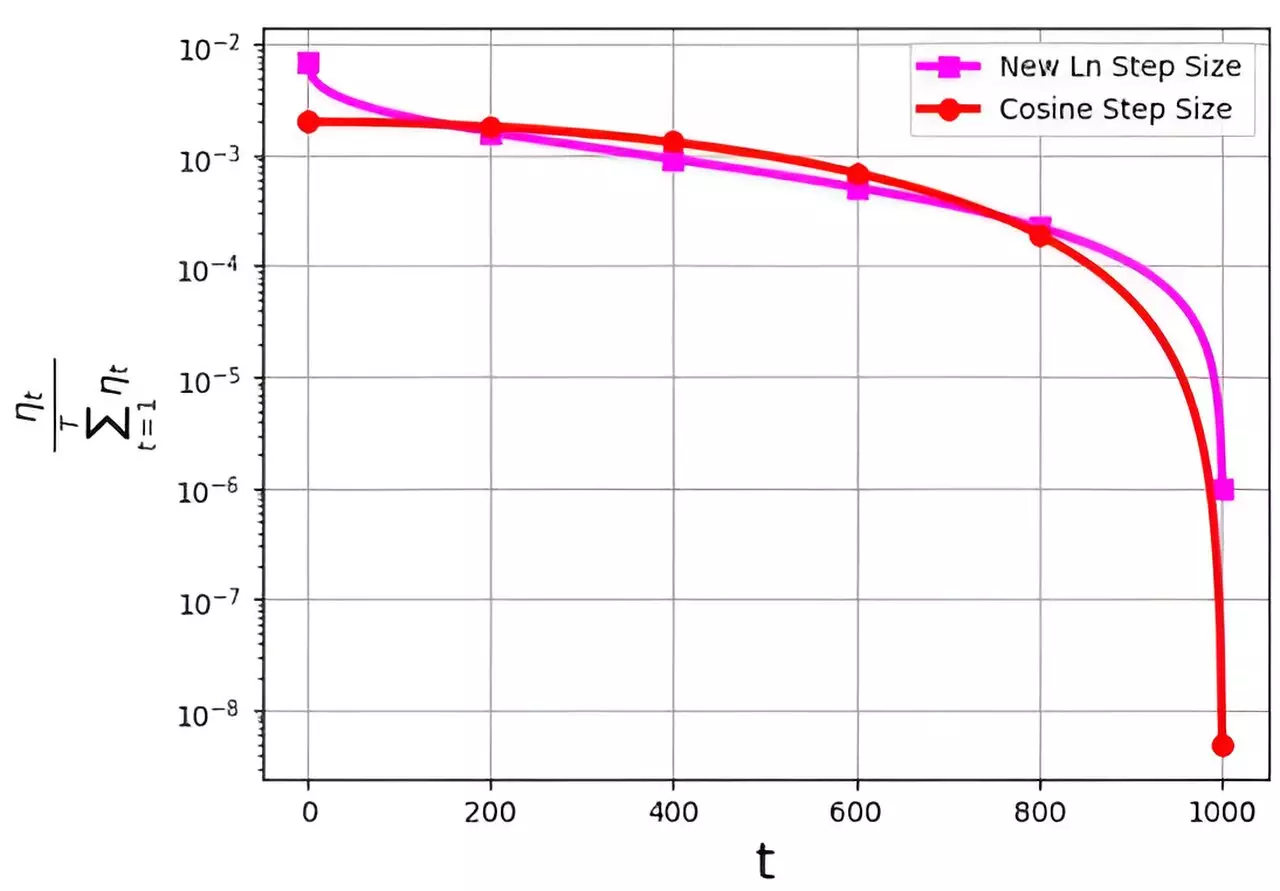

A recent study by M. Soheil Shamaee and his team, published in Frontiers of Computer Science, introduced a new logarithmic step size for SGD. The method has been shown to be particularly beneficial in the final iterations of the algorithm, where it has a higher probability of selection than the traditional cosine step size. The cosine step size assigns very low values to the last iterations; by avoiding this, the logarithmic step size outperforms it in these critical stages, leading to improved overall performance.
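To make the comparison concrete, the sketch below evaluates both schedules side by side. The formulas are assumptions, since the text above does not state them: the cosine schedule is written in its common form, `eta0 / 2 * (1 + cos(pi * t / T))`, and the logarithmic schedule is taken to be `eta0 * (1 - ln(t) / ln(T))`. The function names, the base step size `eta0`, and the horizon `T` are hypothetical and chosen only for illustration.

```python
import math


def cosine_step(t: int, T: int, eta0: float = 0.1) -> float:
    """Common cosine schedule: eta0 / 2 * (1 + cos(pi * t / T))."""
    return 0.5 * eta0 * (1.0 + math.cos(math.pi * t / T))


def log_step(t: int, T: int, eta0: float = 0.1) -> float:
    """Assumed logarithmic schedule: eta0 * (1 - ln(t) / ln(T)), for 1 <= t <= T."""
    return eta0 * (1.0 - math.log(t) / math.log(T))


if __name__ == "__main__":
    T = 100  # hypothetical number of iterations
    for t in (1, 50, 90, 95, 99):
        print(f"t={t:3d}  cosine={cosine_step(t, T):.6f}  log={log_step(t, T):.6f}")
```

Under these assumed forms and with `T = 100`, the logarithmic schedule decays faster early on but stays above the cosine schedule over the last few iterations, because near `t = T` the cosine value shrinks quadratically in `T - t` while the logarithmic value shrinks roughly linearly.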

The experimental results for the new logarithmic step size are promising. The study tested the algorithm on the FashionMNIST, CIFAR10, and CIFAR100 datasets and reported significant improvements in test accuracy. Notably, when combined with a convolutional neural network (CNN) model, the logarithmic step size achieved a 0.9% increase in test accuracy on the CIFAR100 dataset.

Implications and Future Research

The introduction of the logarithmic step size for SGD opens up new possibilities for enhancing the efficiency and performance of the algorithm. The findings of this study have important implications for the field of machine learning and optimization. Future research could focus on further refining the step size strategies for SGD and exploring their applications in different contexts and datasets.

The step size is a critical factor in stochastic gradient descent and can significantly affect the performance of the algorithm. The logarithmic step size proposed by M. Soheil Shamaee and his team offers a promising way to address the shortcomings of traditional step size strategies. By improving the distribution of step sizes across the iterations, it shows potential for advancing machine learning and optimization.