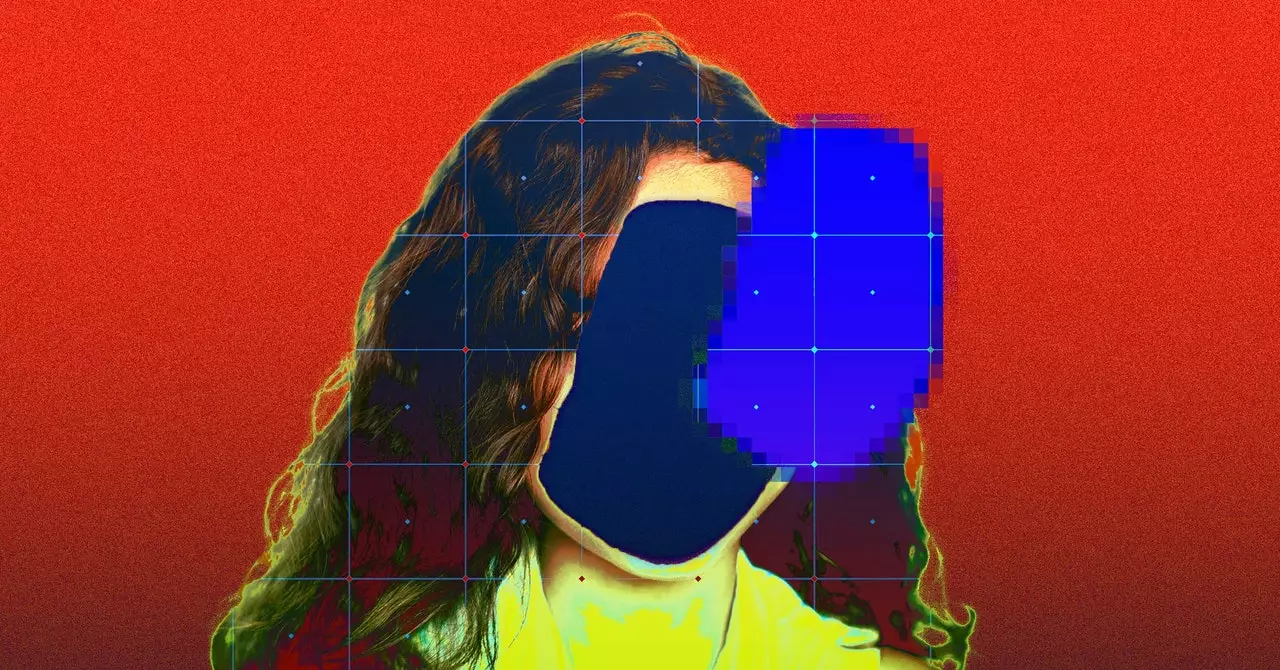

A recent report by Human Rights Watch sheds light on the disturbing practice of using images and personal details of Brazilian children to train AI models without their knowledge or consent. The images were scraped from various sources, including mommy blogs, personal websites, and YouTube videos. This blatant violation of privacy raises serious ethical concerns about the use of children’s data for AI development.

The Human Rights Watch researcher who discovered these images highlighted the risks of using children’s photos to train AI tools. Once the images are incorporated into datasets and used to develop AI models, those models can be used to manipulate the photos and create realistic imagery. This opens up the possibility of malicious actors using the technology to exploit and harm any child who has a photo or video of themselves online.

The LAION-5B dataset, which contains roughly 5.85 billion image-caption pairs, has become a popular source of training data for AI startups. However, the inclusion of children’s images without their consent or knowledge raises questions about the responsibility of dataset creators. LAION has taken some steps to address the issue, including collaborating with organizations to remove references to illegal content. Nevertheless, the ethical implications of using such datasets remain a pressing concern.

Both YouTube and LAION have faced criticism over the unauthorized scraping of content in violation of platform terms of service. The revelation that LAION-5B contained child sexual abuse material underscores the legal and moral implications of using unethically sourced data for AI development. The rise of explicit deepfakes in schools further highlights the urgent need to safeguard children’s privacy and protect them from exploitation.

Beyond the immediate violation of privacy, the use of children’s images in AI training datasets poses a broader threat of revealing sensitive information about them, including locations or medical data. The case of a US-based artist who found her own image in the LAION dataset, sourced from her private medical records, underscores the need for stricter regulations and safeguards to protect children’s privacy in the digital age.

The unethical practice of using children’s images without their consent for AI training not only violates their privacy but also exposes them to risks of exploitation and harm. It is essential for dataset creators, technology companies, and regulatory bodies to address these ethical concerns and prioritize the protection of children’s rights in the digital landscape.