Large language models (LLMs) such as GPT-4 have transformed conversational AI, raising questions about how closely these models can mimic human intelligence. Researchers at UC San Diego used a modified Turing test to assess whether people can distinguish responses generated by GPT-4 from those produced by humans.

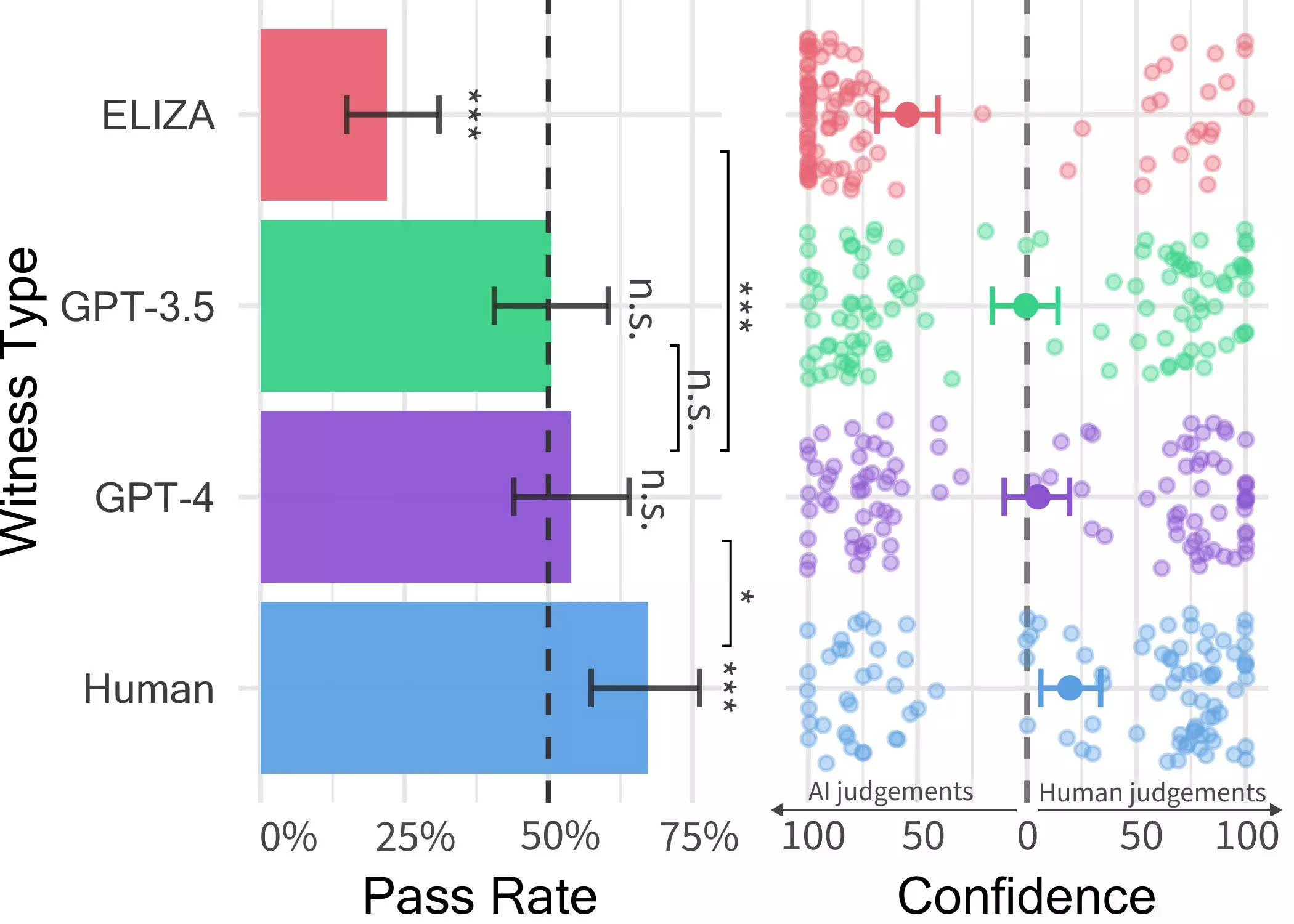

The initial study found that GPT-4 could pass as human in approximately 50% of interactions, suggesting a high degree of realism in its responses. However, limitations in that experiment's design prompted the researchers to run a second experiment to validate the findings. In this follow-up study, participants who conversed with GPT-4 were unable to reliably tell whether they were talking to a human or an AI agent.

The study also exposed some limitations of the Turing test as applied to LLMs. Participants were more successful at correctly identifying human witnesses than AI ones, yet their overall ability to detect when they were talking to GPT-4 was no better than random chance. This has significant implications for how AI technologies are developed and deployed across domains, including client interactions and efforts to combat misinformation.

The Rise of AI Deception

The findings suggest that LLMs, particularly advanced models like GPT-4, have become sophisticated enough to emulate human conversational patterns effectively. This blurring of the line between human and AI communication raises ethical concerns about using AI systems in contexts where trust and authenticity are paramount. As AI becomes increasingly indistinguishable from human agents, society may struggle to maintain transparency and accountability in digital interactions.

Future Research and Considerations

The researchers plan to extend their study with a three-person version of the game, in which an interrogator converses with a human and an AI system simultaneously. This experiment aims to explore how conversational AI performs in more complex settings and to shed light on the cognitive processes people use to distinguish human from machine-generated responses. Its results could offer valuable insight into the evolving relationship between humans and AI systems in digital communication.

The UC San Diego study highlights the growing challenge of differentiating large language models from human agents in conversation. As AI technology continues to advance, society must grapple with the ethical implications of deploying systems that closely resemble human behavior. This shift toward AI deception calls for a reevaluation of how we interact with intelligent machines and of the safeguards needed to ensure transparency and trust in an increasingly digitized world.