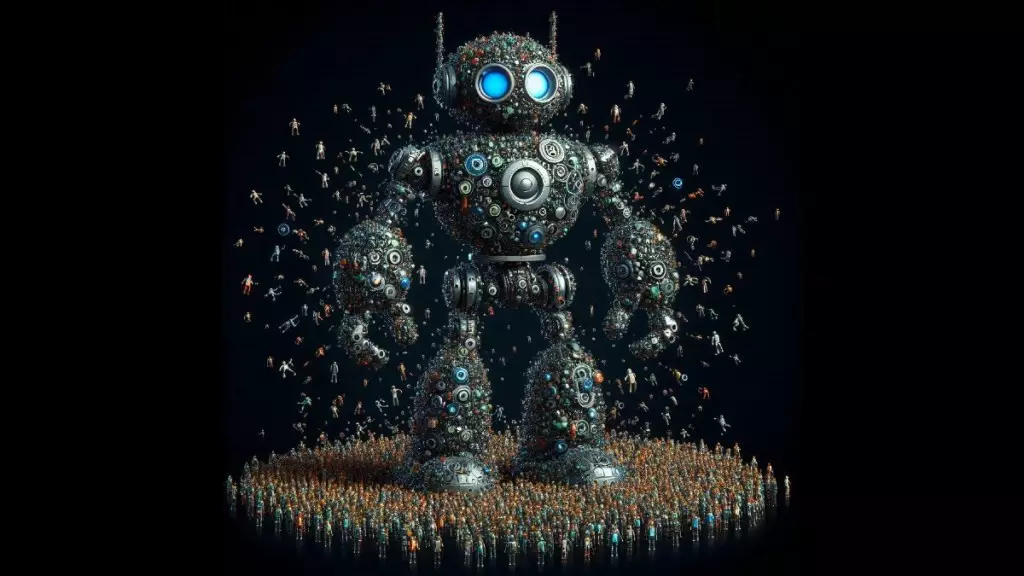

The concept of Mixture-of-Experts (MoE) has gained significant traction in the world of large language models (LLMs) as a way to scale up model size without a proportional increase in computational cost. Unlike dense models, which use all of their parameters for every input, MoE architectures route each input to a small subset of specialized “expert” modules. This lets LLMs grow their parameter count while keeping inference costs manageable. MoE has been integrated into several popular LLMs, including Mixtral, DBRX, and Grok, and is reportedly used in GPT-4.

Despite these advantages, current MoE techniques are limited to a relatively small number of experts. One constraint is routing cost: a standard MoE router computes a score for every expert for every token, so its cost grows linearly with the number of experts. This limitation hampers the scalability of MoE models and keeps them from reaching their full potential in performance and efficiency.

Google DeepMind has introduced a new architecture called Parameter Efficient Expert Retrieval (PEER) in a recent paper. PEER allows MoE models to scale to millions of experts, which, according to the paper, improves the performance-compute tradeoff of large language models.

The Challenge of Scaling Language Models

In recent years, increasing the parameter count of language models has reliably improved their performance and expanded their capabilities. Beyond a certain point, however, further scaling becomes difficult because of computational and memory bottlenecks.

The Role of Feedforward (FFW) Layers in Transformers

Every transformer block used in LLMs contains two main components: an attention layer and a feedforward (FFW) layer. The attention layer computes relationships between the input tokens, while the FFW layer is widely thought to store much of the model’s knowledge. FFW layers account for a large share of the model’s parameters (about two-thirds of each block’s weights in a standard configuration). Because a dense FFW layer’s computational cost grows in direct proportion to its size, these layers become a bottleneck when scaling transformers.
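To make the parameter share concrete, the following back-of-the-envelope sketch counts the weights in one transformer block. It assumes the common configuration in which the FFW hidden size is four times the model width, and it ignores biases, normalization layers, and embeddings. `block_param_counts` and `ffw_flops_per_token` are hypothetical helpers written only for this illustration.

```python
# Hypothetical helpers: count the weights in one standard transformer block,
# ignoring biases, layer norms, and embeddings.
def block_param_counts(d_model: int, ffw_multiplier: int = 4) -> dict:
    d_ff = ffw_multiplier * d_model
    # Attention: query, key, value, and output projections, each d_model x d_model.
    attention = 4 * d_model * d_model
    # FFW: an up-projection (d_model x d_ff) and a down-projection (d_ff x d_model).
    ffw = 2 * d_model * d_ff
    return {"attention": attention, "ffw": ffw, "ffw_fraction": ffw / (attention + ffw)}


def ffw_flops_per_token(d_model: int, ffw_multiplier: int = 4) -> int:
    # A dense layer does one multiply and one add per weight for each token,
    # so its cost is about 2 FLOPs per parameter and grows in lockstep with size.
    return 2 * block_param_counts(d_model, ffw_multiplier)["ffw"]


counts = block_param_counts(4096)
print(counts["attention"], counts["ffw"], round(counts["ffw_fraction"], 3))
# 67108864 134217728 0.667
```

Doubling the FFW hidden size (`ffw_multiplier=8`) doubles `ffw_flops_per_token`, which is exactly the proportional cost that makes dense FFW layers hard to scale.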

Addressing the Bottleneck with MoE

Mixture-of-Experts (MoE) addresses this bottleneck by replacing the dense FFW layer with a set of sparsely activated expert modules, each of which is a smaller FFW network. Because only a few experts run for any given token, the model can increase its capacity without a matching increase in computational cost. A router assigns each input to the experts best suited to it, and individual experts can come to specialize in particular kinds of inputs.
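A minimal NumPy sketch of this routing follows. It assumes top-k routing with a softmax over only the selected experts’ scores (the scheme described for Mixtral) and simple ReLU experts. The function and weight names are hypothetical, and production details such as load-balancing losses and expert capacity limits are omitted.

```python
import numpy as np


def moe_layer(x, router_w, experts_in, experts_out, k=2):
    """Sparse MoE forward pass for a batch of tokens (illustrative sketch).

    x: (tokens, d_model); router_w: (d_model, n_experts)
    experts_in: (n_experts, d_model, d_hidden); experts_out: (n_experts, d_hidden, d_model)
    """
    logits = x @ router_w                          # (tokens, n_experts): one score per expert
    top_k = np.argsort(logits, axis=-1)[:, -k:]    # indices of the k highest-scoring experts
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        scores = logits[t, top_k[t]]
        gates = np.exp(scores - scores.max())
        gates /= gates.sum()                       # softmax over the selected experts only
        for gate, e in zip(gates, top_k[t]):
            hidden = np.maximum(x[t] @ experts_in[e], 0.0)  # ReLU expert FFW
            out[t] += gate * (hidden @ experts_out[e])      # gate-weighted expert output
    return out, top_k


rng = np.random.default_rng(0)
d_model, d_hidden, n_experts = 16, 32, 8
x = rng.standard_normal((4, d_model))
router_w = rng.standard_normal((d_model, n_experts))
experts_in = rng.standard_normal((n_experts, d_model, d_hidden))
experts_out = rng.standard_normal((n_experts, d_hidden, d_model))
y, chosen = moe_layer(x, router_w, experts_in, experts_out, k=2)
print(y.shape, chosen.shape)  # (4, 16) (4, 2)
```

Each token touches only `k` of the `n_experts` expert weight matrices, so adding experts grows the parameter count while per-token expert compute stays fixed. The router, however, still scores every expert, which is the linear routing cost that limits how far this design scales.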

Optimizing MoE Models

Research indicates that the optimal number of experts in an MoE model depends on factors such as the number of training tokens and the compute budget. When these factors are well balanced, MoE models have consistently outperformed dense models trained with the same compute. Increasing the granularity of an MoE model, that is, splitting its capacity across a larger number of smaller experts, can lead to notable performance improvements and more efficient knowledge acquisition, especially when model size and training data grow as well.
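The granularity tradeoff can be illustrated with simple arithmetic. The sketch below assumes the common definition in which granularity is the factor by which each expert is shrunk, with top-k scaled up by the same factor so that total and active parameters stay constant. `granular_config` is a hypothetical helper, and the sizes are illustrative rather than taken from any particular model. What grows is the number of distinct expert combinations the router can choose from.

```python
from math import comb


def granular_config(n_experts, top_k, expert_hidden, granularity):
    """Hypothetical helper: split every expert into `granularity` smaller ones.

    Top-k is scaled by the same factor, so the total and the active number of
    hidden neurons are unchanged; only the routing flexibility grows.
    """
    n = n_experts * granularity
    k = top_k * granularity
    hidden = expert_hidden // granularity
    return {
        "n_experts": n,
        "top_k": k,
        "expert_hidden": hidden,
        "total_hidden": n * hidden,     # same for every granularity (if it divides evenly)
        "active_hidden": k * hidden,    # per-token expert compute stays constant
        "routing_choices": comb(n, k),  # number of distinct expert subsets per token
    }


for g in (1, 2, 4):
    c = granular_config(n_experts=8, top_k=2, expert_hidden=4096, granularity=g)
    print(g, c["n_experts"], c["top_k"], c["expert_hidden"],
          c["total_hidden"], c["active_hidden"], c["routing_choices"])
# 1 8 2 4096 32768 8192 28
# 2 16 4 2048 32768 8192 1820
# 4 32 8 1024 32768 8192 10518300
```

This only shows how the configuration changes. Whether the extra routing flexibility improves quality is an empirical question, which is what the research above addresses.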

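DeepMind’s Parameter Efficient Expert Retrieval (PEER) architecture addresses the limitations of traditional MoE approaches by routing through a learned index, based on product-key retrieval, that efficiently selects experts from a very large pool. Because the index avoids scoring every expert for every token, PEER can draw on millions of experts without a proportional slowdown. PEER also uses tiny experts, each with a single hidden neuron. Since each token’s output is assembled from a dynamically selected set of these neurons, hidden neurons are effectively shared across experts, which improves knowledge transfer and parameter efficiency and makes MoE models far more scalable.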
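The retrieval step can be sketched for a single token in NumPy. This is a minimal illustration under stated assumptions, not the paper’s implementation: ReLU is assumed as the expert nonlinearity, details such as query normalization are omitted, and all names are hypothetical. The query is split into two halves, each half is scored against only √N sub-keys, and the best k of the k² combined candidates are kept. Each selected expert is a single hidden neuron, so its weights are just two vectors, u_i and v_i.

```python
import numpy as np


def peer_forward(x, query_w, sub_keys_1, sub_keys_2, down_u, up_v, k=4):
    """One retrieval head of a PEER-style layer for a single token (sketch).

    x: (d_model,); query_w: (d_model, d_key) with d_key even
    sub_keys_1, sub_keys_2: (n_sub, d_key // 2), giving n_sub**2 experts
    down_u, up_v: (n_sub**2, d_model); expert i computes relu(u_i . x) * v_i
    """
    n_sub = sub_keys_1.shape[0]
    q1, q2 = np.split(x @ query_w, 2)              # product keys: score each half separately
    s1, s2 = sub_keys_1 @ q1, sub_keys_2 @ q2      # 2 * n_sub dot products, not n_sub**2
    top1, top2 = np.argsort(s1)[-k:], np.argsort(s2)[-k:]
    # Score the k*k candidate pairs; a pair's summed score equals its full-key score,
    # so this recovers the same top-k as scoring all n_sub**2 keys (ignoring ties).
    cand = s1[top1][:, None] + s2[top2][None, :]
    rows, cols = np.unravel_index(np.argsort(cand, axis=None)[-k:], cand.shape)
    experts = top1[rows] * n_sub + top2[cols]      # flat expert ids in [0, n_sub**2)
    scores = cand[rows, cols]
    gates = np.exp(scores - scores.max())
    gates /= gates.sum()                           # softmax over the k retrieved experts
    hidden = np.maximum(down_u[experts] @ x, 0.0)  # one hidden neuron per expert: (k,)
    return (gates * hidden) @ up_v[experts], experts


def peer_layer(x, query_ws, sub_keys_1, sub_keys_2, down_u, up_v, k=4):
    """Multi-head sketch: heads use separate query networks over one shared expert pool."""
    return sum(peer_forward(x, qw, sub_keys_1, sub_keys_2, down_u, up_v, k)[0]
               for qw in query_ws)


rng = np.random.default_rng(0)
d_model, d_key, n_sub, k = 16, 8, 32, 4            # n_sub**2 = 1024 experts
x = rng.standard_normal(d_model)
query_w = rng.standard_normal((d_model, d_key))
sub_keys_1 = rng.standard_normal((n_sub, d_key // 2))
sub_keys_2 = rng.standard_normal((n_sub, d_key // 2))
down_u = rng.standard_normal((n_sub ** 2, d_model))
up_v = rng.standard_normal((n_sub ** 2, d_model))
y, ids = peer_forward(x, query_w, sub_keys_1, sub_keys_2, down_u, up_v, k=k)

# Brute-force check: score all 1024 full keys (concatenated sub-key pairs) directly.
full_keys = np.concatenate(
    [np.repeat(sub_keys_1, n_sub, axis=0), np.tile(sub_keys_2, (n_sub, 1))], axis=1)
exact = np.argsort(full_keys @ (x @ query_w))[-k:]
print(sorted(ids.tolist()) == sorted(exact.tolist()))  # should match the brute-force top-k
```

The retrieval cost grows with √N rather than N, which is what makes a pool of a million experts (1000 sub-keys per half) practical in this scheme.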