Robotics has advanced significantly in recent years, particularly in the area of imitation learning. This method shows promise for teaching robots to perform everyday tasks, such as cooking or washing dishes, reliably. However, a key challenge is that imitation learning frameworks need detailed human demonstrations to train robotic systems effectively. These demonstrations must capture the specific movements a robot has to reproduce in order to replicate a task accurately.

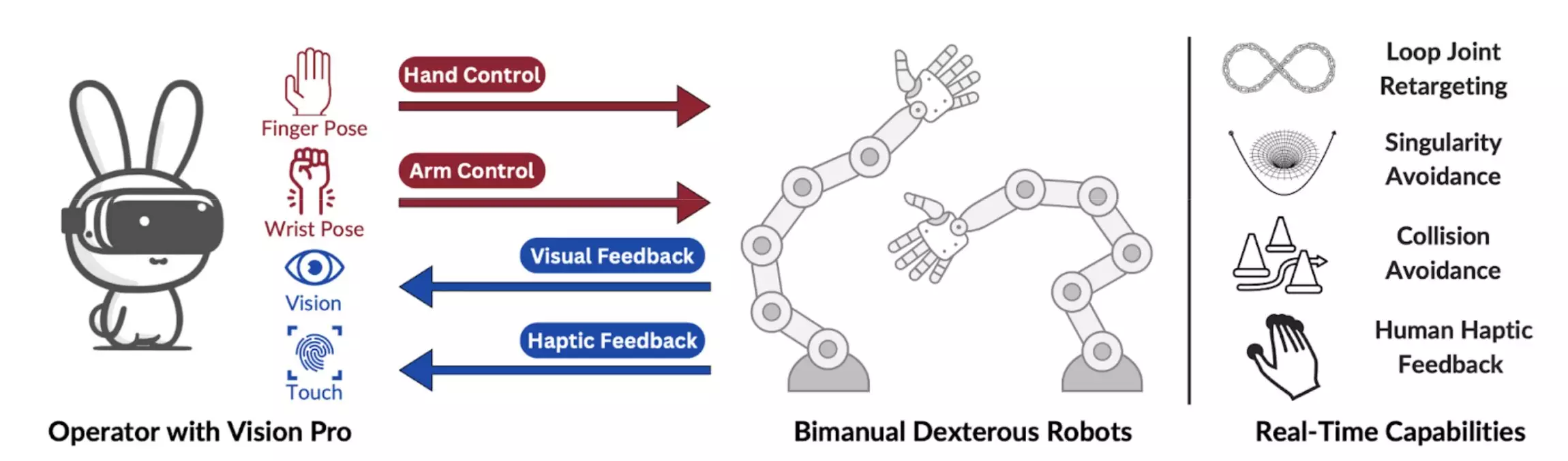

Researchers at the University of California, San Diego have developed a system called Bunny-VisionPro to address the limitations of existing teleoperation systems in capturing complex, coordinated movements. The system lets an operator teleoperate robotic manipulators to complete bimanual dexterous manipulation tasks. Unlike traditional teleoperation systems, Bunny-VisionPro focuses on dual-hand control, which is essential for tasks that demand precise coordination between two hands.

The primary goal of Bunny-VisionPro is to create a universal teleoperation system that can adapt across different robots and tasks, simplifying the process of collecting human demonstrations for training robotic control algorithms. By making teleoperation and demonstration data collection as intuitive and immersive as playing a VR game, Bunny-VisionPro enhances the quality of demonstrations essential for imitation learning.

Bunny-VisionPro comprises three key components: an arm motion control module, a hand motion retargeting module, and a haptic feedback module. The arm motion control module converts human wrist poses into robot end-effector poses while handling singularity and collision issues. The hand motion retargeting module maps human hand poses to robot hand poses, including hands with complex loop-joint structures. Lastly, the haptic feedback module relays tactile sensing to the user for a more immersive experience.
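The article does not describe how the arm motion control module is implemented. As a rough illustration of the general idea of turning a wrist pose into an end-effector target, the sketch below assumes a simple relative mapping: the operator's wrist motion since a calibration pose is replayed on top of the robot's starting end-effector pose. All names (`matmul`, `invert_rigid`, `retarget_wrist`) are hypothetical, poses are plain 4x4 homogeneous transforms, and the singularity and collision handling mentioned above is deliberately left out.

```python
# Illustrative sketch only; this is NOT the Bunny-VisionPro implementation.
# Poses are 4x4 homogeneous transforms stored as nested lists.

def matmul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def retarget_wrist(wrist_now, wrist_start, ee_start):
    """Map the current wrist pose to an end-effector target pose.

    Assumption: the wrist motion relative to the calibration pose
    (wrist_start) is applied to the robot's starting pose (ee_start).
    """
    delta = matmul(invert_rigid(wrist_start), wrist_now)
    return matmul(ee_start, delta)


def translation(x, y, z):
    """Build a pure-translation pose (identity rotation)."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


# Usage: the wrist moves 10 cm along x from its calibration pose,
# so the end-effector target moves 10 cm along x from its start pose.
target = retarget_wrist(translation(0.1, 0.0, 0.0),
                        translation(0.0, 0.0, 0.0),
                        translation(0.5, 0.0, 0.3))
```

A relative mapping like this avoids having to align the operator's and the robot's coordinate origins exactly, but a real system would still need to filter the resulting target for reachability, singularities, and collisions before sending it to the arm.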

One of the standout features of Bunny-VisionPro is its ability to ensure safe control of bimanual robotic systems in real time. Unlike previous solutions, Bunny-VisionPro integrates haptic and visual feedback, which improves the user’s experience and, ultimately, teleoperation success rates. The system’s balance of safety and performance makes it well suited to real-world robotic applications that demand reliability and precision.

The work by Xiaolong Wang and his team paves the way for a more streamlined approach to collecting demonstrations for imitation learning frameworks. Bunny-VisionPro’s potential impact extends beyond research labs, with the possibility of deployment in robotics facilities worldwide. As researchers work to improve manipulation capabilities by leveraging tactile information, Bunny-VisionPro marks a milestone in the evolution of immersive teleoperation systems.