As the competition in the artificial intelligence sector heats up, the Chinese startup DeepSeek has made a significant mark with its latest ultra-large language model, DeepSeek-V3. Renowned for its ability to rival established AI Giants, the company has once again demonstrated its commitment to advancing open-source technology. This development signifies not just a technological leap, but also emphasizes the shifting dynamics in the AI landscape, creating new opportunities for innovation and accessibility.

DeepSeek-V3 stands out, boasting a remarkable 671 billion parameters, which has been implemented through a sophisticated mixture-of-experts (MoE) architecture. This architecture allows the model to activate only a subset of its parameters for each task, ensuring efficient processing while maintaining high accuracy. Such a strategy not only minimizes computational overhead but also positions DeepSeek-V3 as a formidable contender within a competitive market dominated by AI titans like OpenAI and Anthropic.

According to DeepSeek’s benchmarking data, V3 has outperformed its key open-source rivals, effortlessly trumping established models like Meta’s Llama 3.1-405B, while also managing to closely approach the performance metrics of proprietary models. This presents a paradigm shift; with each technological evolution, DeepSeek narrows the gap that has historically existed between open-source and closed-source models. Such advancements raise an intriguing question: can open-source models eventually match, or even surpass, their proprietary counterparts in capabilities and versatility?

DeepSeek is not just resting on its laurels. The company has introduced two groundbreaking features with its latest model. The first is an auxiliary loss-free load-balancing strategy that enhances efficiency. This feature allows the model to optimize the нагрузки across different experts dynamically, avoiding bottlenecks without hampering overall performance. By ensuring that computational resources are judiciously allocated, DeepSeek-V3 can respond to varying workloads with agility.

Secondly, the introduction of multi-token prediction (MTP) enables the model to forecast multiple future tokens in a single pass. This not only bolsters training efficiency but accelerates text generation speed, allowing the model to produce up to 60 tokens per second. Such advancements are crucial as they cater to an industry hungry for quicker and more effective AI solutions.

A highlight that stands out about DeepSeek-V3’s development is its cost efficiency. The entire training process, utilizing cutting-edge hardware and algorithms, was completed with approximately 2,788,000 hours on H800 GPUs—totaling an estimated cost of $5.57 million. This figure starkly contrasts with the hundreds of millions often spent on the development of large language models. Industry competitor Llama-3.1 reportedly invests over $500 million in their training phase alone. DeepSeek’s approach not only reflects a financially prudent strategy but also suggests a new model of sustainability in AI development.

Furthermore, the training was bolstered by utilizing advanced techniques, including mixed precision training and pipeline optimizations, cutting down both time and costs without sacrificing performance.

In extensive testing, DeepSeek-V3 not only yielded impressive results on standardized benchmarks but also excelled in areas that traditionally see heavy competition. Specifically, it showcased extraordinary capabilities on Chinese language tasks and mathematical evaluations, outshining its competitors. In the Math-500 test, for instance, it garnered scores that placed it leagues ahead of its nearest adversary. The model’s prowess is underscored by its balanced performance across diverse contexts, signaling its potential as a ubiquitous tool across various applications.

However, it is important to note that it isn’t invincible; challenges arise from entities such as Anthropic’s Claude 3.5 Sonnet, which has managed to outperform DeepSeek-V3 on several specific benchmarks. This ongoing dynamic characterizes the current state of AI development—heavy competition that creates a cycle of continuous improvement.

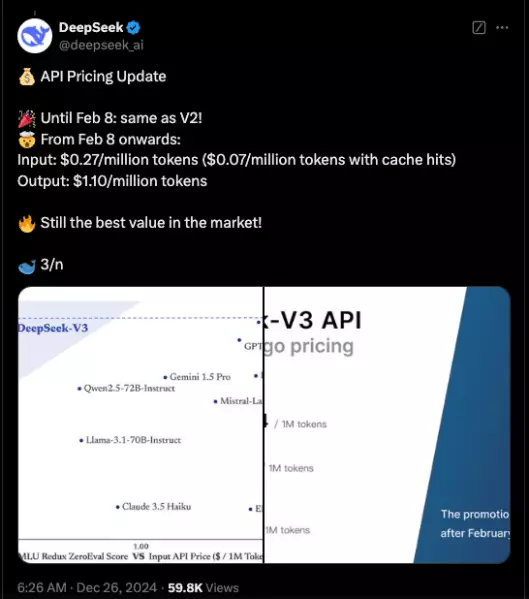

DeepSeek’s commitment to democratizing technology is evident in its open-source ethos. The model’s code is accessible via GitHub under the MIT license, making it more widely available for developers and businesses. Additionally, enterprises have the option to leverage the brand new DeepSeek Chat platform, facilitating commercial use through a competitive pricing model.

This strategic move not only amplifies accessibility but stimulates innovation, giving enterprises the power to select from a broader array of tools to enhance their operations. The implications are manifold; as AI systems become more sophisticated and accessible, the landscape becomes less about a single player dominating the market and more about collective advancement.

DeepSeek-V3’s release illustrates a significant turning point in open-source AI development. By successfully challenging established closed-source models and democratizing access to cutting-edge technology, DeepSeek not only paves the path toward a healthier AI ecosystem but also fuels the ongoing discourse surrounding the pursuit of artificial general intelligence (AGI). The journey of AI development is at a crucial juncture, and DeepSeek’s contributions promise to reshape the future.