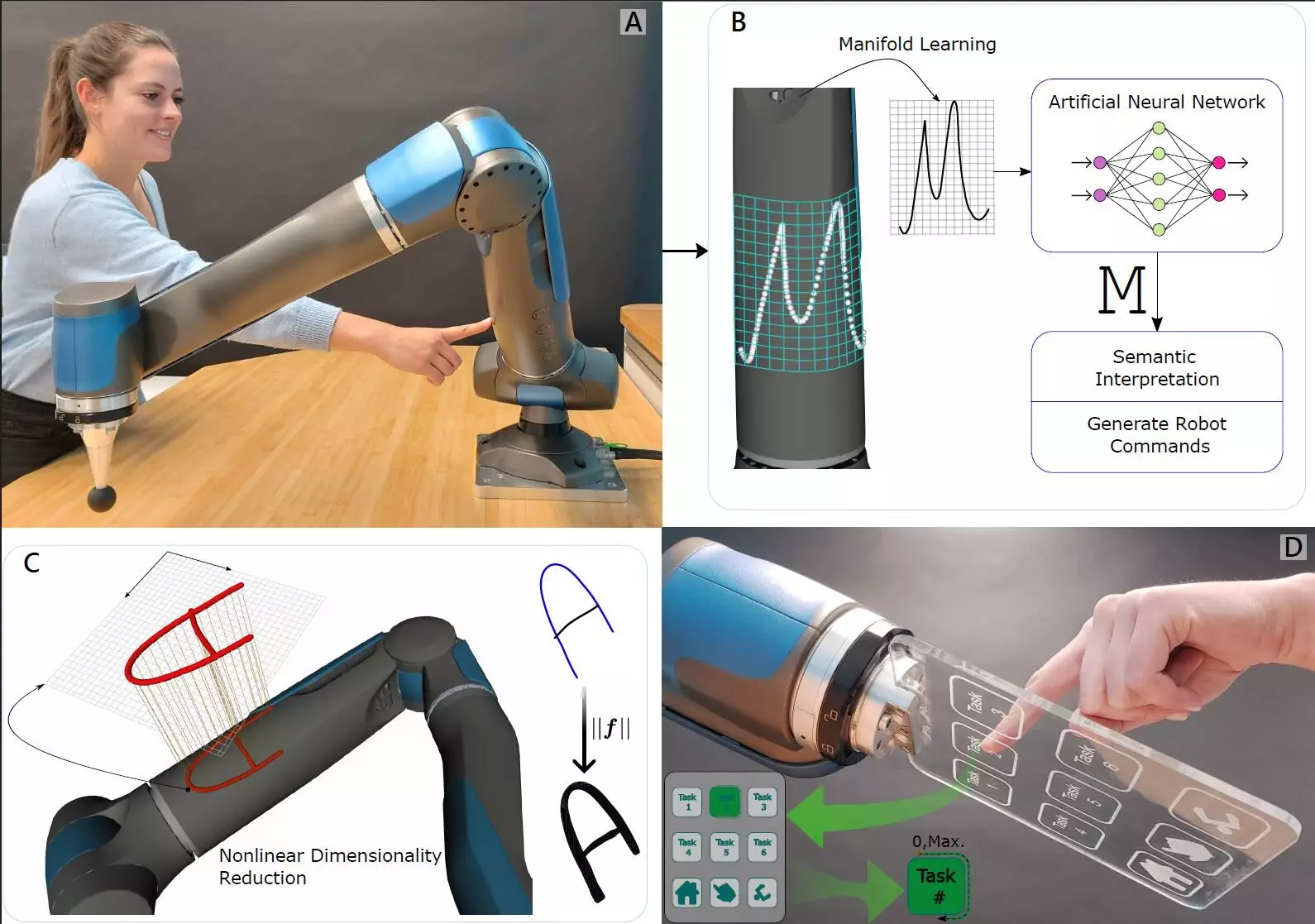

The intersection of robotics and machine learning has grown into one of the most exciting frontiers of modern technology. A recent study by a team of roboticists at the German Aerospace Center proposes a new approach to artificial touch in robots. Conventional methods rely on advanced synthetic skins. The team instead combines internal force-torque sensors with state-of-the-art machine-learning algorithms. This combination narrows a long-standing gap in what robots can feel and lays the groundwork for closer, more natural human-robot interaction.

The Essence of Touch: A Two-Way Experience

Touch is a sensory experience made up of many subtleties: texture, temperature, pressure, and emotional context. Traditional robotic sensing often treats touch as a static, one-way measurement. This research instead emphasizes its two-way nature: when we touch an object, we perceive its characteristics and also feel the object pushing back against our skin. The researchers capture this push-back through force-torque sensing in the robot's joints. This gives robots the capacity to detect and respond to external contact in a more human-like manner.

Internal Sensors as a Mechanism of Awareness

Central to the team's approach are highly sensitive force-torque sensors placed in the joints of the robotic arm. Each sensor measures forces and torques along several axes. The robot can therefore detect how strongly, and in which direction, it is being pushed at different points along the arm. This feedback lets the robot recognize specific touch events, such as someone pressing a finger against it. Sensing of this kind could make interactions between robots and people more natural in many settings, especially in collaborative industrial environments.
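A common way to turn joint measurements into an estimate of the external contact force is the static relation tau = J(q)^T F. Here tau is the vector of joint torques caused by the contact, J(q) is the arm's Jacobian at joint configuration q, and F is the force at the contact point. The sketch below applies this relation to a hypothetical planar two-link arm. It assumes the contact acts at the tip and that gravity and motion effects have already been subtracted from the torque readings. It illustrates the textbook relation only and is not presented as the research team's method. The function names and link lengths are illustrative.

```python
import math


def jacobian(q1, q2, l1=1.0, l2=1.0):
    """Tip-position Jacobian of a hypothetical planar two-link arm."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def joint_torques_from_force(q1, q2, fx, fy, l1=1.0, l2=1.0):
    """Forward map tau = J(q)^T F: joint torques produced by a force at the tip."""
    j = jacobian(q1, q2, l1, l2)
    tau1 = j[0][0] * fx + j[1][0] * fy
    tau2 = j[0][1] * fx + j[1][1] * fy
    return tau1, tau2


def estimate_tip_force(q1, q2, tau1, tau2, l1=1.0, l2=1.0):
    """Solve tau = J(q)^T F for F, assuming (hypothetically) the contact is at the tip
    and tau already excludes gravity and dynamics."""
    j = jacobian(q1, q2, l1, l2)
    # Entries of the 2x2 matrix J^T = [[a, b], [c, d]].
    a, b = j[0][0], j[1][0]
    c, d = j[0][1], j[1][1]
    det = a * d - b * c
    if abs(det) < 1e-9:
        raise ValueError("arm is near a singular configuration")
    # Closed-form inverse of a 2x2 matrix applied to tau.
    fx = (d * tau1 - b * tau2) / det
    fy = (-c * tau1 + a * tau2) / det
    return fx, fy


# Round trip: torques caused by a known tip force, then recover that force.
tau = joint_torques_from_force(0.3, 1.2, 2.0, -1.5)
print(estimate_tip_force(0.3, 1.2, *tau))  # approximately (2.0, -1.5)
```

In practice, the contact location is usually unknown and has to be inferred as well. An arm with more joints than force components also yields more torque readings than unknowns, so a least-squares solution would replace the exact 2x2 inverse used here.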

Artificial Intelligence as a Learning Companion

Using machine learning, the researchers equipped the robots with an algorithm that interprets the data collected from the force-torque sensors. The algorithm acts as a learning companion, allowing the robot to adapt to and distinguish between different touch scenarios. In practical terms, a robot can tell a light pat from a firm grip and respond appropriately in real time. This kind of differentiation is an important step forward, because the quality of a touch carries cues that shape how a robot should act in its environment and around its human users.
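The article does not say which learning algorithm the team used. The following sketch is a stand-in showing one minimal way to separate a light pat from a firm grip. Each force trace is reduced to two hypothetical features, peak force and contact duration. A nearest-centroid classifier then assigns a new touch to the class whose average features are closest. The 0.5 N contact threshold, the feature choice, and the training traces are made-up values for illustration, not data from the study.

```python
import math


def features(forces, dt):
    """Summarise a force-magnitude trace (newtons, one sample every dt seconds)
    as (peak force, contact duration)."""
    threshold = 0.5  # N; hypothetical cut-off for "in contact"
    peak = max(forces)
    duration = sum(dt for f in forces if f > threshold)
    return peak, duration


class NearestCentroid:
    """Minimal nearest-centroid classifier, used here as a simple stand-in model."""

    def fit(self, X, y):
        sums, counts = {}, {}
        for x, label in zip(X, y):
            s = sums.setdefault(label, [0.0] * len(x))
            for i, v in enumerate(x):
                s[i] += v
            counts[label] = counts.get(label, 0) + 1
        # Average feature vector per class.
        self.centroids = {lab: [v / counts[lab] for v in s] for lab, s in sums.items()}
        return self

    def predict(self, x):
        # Pick the class whose centroid is closest in Euclidean distance.
        return min(self.centroids, key=lambda lab: math.dist(x, self.centroids[lab]))


dt = 0.05  # hypothetical 20 Hz sampling
# Toy, made-up training traces: short light contacts vs. long strong ones.
train = [
    ([0.1, 2.0, 2.5, 0.2], "pat"),
    ([0.2, 1.5, 3.0, 1.0, 0.1], "pat"),
    ([0.2] + [15.0] * 30 + [0.3], "grip"),
    ([0.1] + [18.0] * 25 + [0.2], "grip"),
]
clf = NearestCentroid().fit([features(f, dt) for f, _ in train],
                            [label for _, label in train])
print(clf.predict(features([0.3, 2.2, 2.8, 0.4], dt)))  # pat
```

Peak force (newtons) and duration (seconds) sit on different scales, so a real system would normally standardize the features before measuring distances. With these toy values, the two classes are far enough apart that it makes no difference.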

Transforming Industrial Robotics

The implications of this research extend beyond the laboratory. As robots become more common across industries, their ability to sense and interpret human touch could redefine human-robot collaboration. In manufacturing, for instance, robots might assist human workers directly, recognizing when help is needed or adjusting their actions in response to a colleague's touch. Such a shift would position robots not just as tools but as collaborative partners in the workforce. More sensitive robots promise to change how we approach automation and how machines and people work side by side.