Recent advancements in artificial intelligence, particularly in large language models (LLMs) such as GPT-4, have changed how we perceive and interact with machine-generated text. Researchers have now identified a curious property of these models: they are consistently better at predicting the next word in a sentence than the previous one, an effect termed the “Arrow of Time.” This directional bias could reshape our understanding of language processing in AI and open new avenues for both AI development and the study of cognitive processes.

At the core of LLMs lies a deceptively simple yet remarkably effective mechanism: word prediction. These models analyze the sequence of words that precedes a given position, using statistical patterns derived from vast datasets to forecast what comes next. Whether they are translating text, generating prose, or powering chatbots, LLMs are forward-oriented in both design and application. Backward prediction, that is, inferring which word precedes a known word, presents a different challenge, and one that had not been thoroughly explored until now.
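To make the idea of forward and backward word prediction concrete, here is a minimal sketch using a bigram count model, a far simpler stand-in for an LLM. The corpus and the function names `train_bigram` and `predict` are illustrative assumptions, not anything taken from the study. The point is only that the same procedure, run on reversed text, turns a next-word predictor into a previous-word predictor.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each word follows each context word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict(counts, context):
    """Return the most frequent continuation of `context`, or None if unseen."""
    if context not in counts:
        return None
    return counts[context].most_common(1)[0][0]

# Toy corpus (hypothetical); a real model is trained on vastly more text.
corpus = "the cat sat on the mat and the cat slept".split()

forward = train_bigram(corpus)         # predicts the next word
backward = train_bigram(corpus[::-1])  # same code on reversed text predicts the previous word

print(predict(forward, "the"))   # word most often seen after "the"  -> cat
print(predict(backward, "cat"))  # word most often seen before "cat" -> the
```

An LLM replaces the count table with a neural network and conditions on a much longer context, but the forward and backward tasks are set up in the same way.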

A team of researchers, led by Professor Clément Hongler from EPFL and Jérémie Wenger from Goldsmiths, London, investigated the capabilities of various language model architectures, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) networks. Their findings revealed a small but consistent performance drop when models attempted to predict words in reverse order. Every model tested displayed what the researchers termed the “Arrow of Time” effect, a bias that points to a deeper asymmetry in language processing.

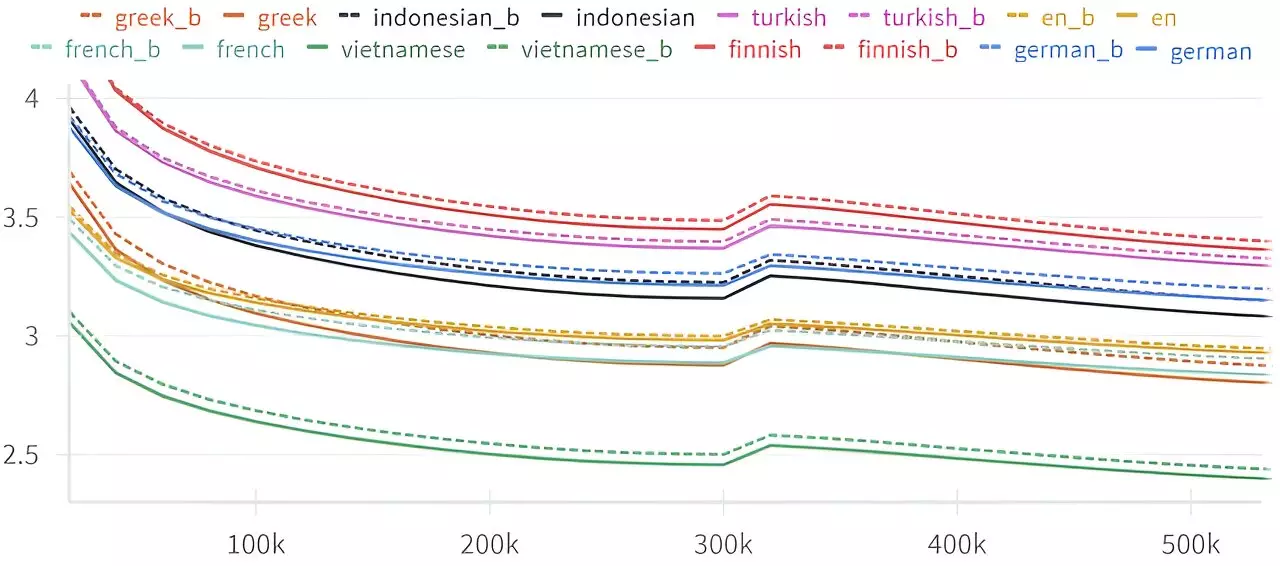

The central result is that LLMs are measurably weaker at backward prediction than at forward prediction. Hongler notes that this trend is consistent across languages and model types, which suggests a general property of how LLMs engage with language. The performance gap is subtle, but it raises intriguing questions about the mechanisms driving the disparity and the implications for future AI research.
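A comparison of this kind can be sketched as follows: fit the same model once on text in its natural order and once on the reversed text, then compare the average per-token loss on held-out data. The sketch below is only an illustration under stated assumptions. It uses a smoothed bigram model rather than the neural architectures the researchers tested, and the names `bigram_cross_entropy` and `arrow_of_time_gap`, the toy text, and the 70/30 split are all hypothetical. A toy model like this may show no gap, or a gap of either sign.

```python
import math
from collections import Counter, defaultdict

def bigram_cross_entropy(train, test, alpha=1.0):
    """Average negative log2-probability per token of `test` under an
    add-alpha smoothed bigram model fitted on `train`."""
    vocab = set(train) | set(test)
    pair_counts = defaultdict(Counter)
    for prev, nxt in zip(train, train[1:]):
        pair_counts[prev][nxt] += 1
    total_bits, n = 0.0, 0
    for prev, nxt in zip(test, test[1:]):
        ctx = pair_counts.get(prev, Counter())  # empty counts for unseen contexts
        p = (ctx[nxt] + alpha) / (sum(ctx.values()) + alpha * len(vocab))
        total_bits -= math.log2(p)
        n += 1
    return total_bits / n

def arrow_of_time_gap(train, test):
    """Return (forward loss, backward loss, backward minus forward)."""
    fwd = bigram_cross_entropy(train, test)
    bwd = bigram_cross_entropy(train[::-1], test[::-1])  # reversed token order
    return fwd, bwd, bwd - fwd

# Toy text (hypothetical); a meaningful measurement needs a large corpus.
text = ("the cat sat on the mat . the dog sat on the rug . "
        "the cat saw the dog . the dog saw the cat .").split()
split = int(0.7 * len(text))
train, test = text[:split], text[split:]

fwd, bwd, gap = arrow_of_time_gap(train, test)
print(f"forward: {fwd:.3f} bits/token, backward: {bwd:.3f} bits/token, gap: {gap:+.3f}")
```

A positive gap would mean the backward model is worse. Training both directions on the same data with the same model isolates the direction of prediction as the only difference between the two runs.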

These findings echo ideas put forth by Claude Shannon, the founding figure of Information Theory. In his groundbreaking work from 1951, Shannon considered whether predicting a sequence of characters is harder in one direction than in the other. Although the two tasks should be equally difficult in theory, he observed that humans found backward prediction harder. This connection highlights a fascinating intersection between computational linguistics and cognitive science.
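The theoretical symmetry can be seen from the chain rule of probability, which lets the same joint probability of a sequence be factorized in either direction. The notation here is ours, not the article's: $x_1, \dots, x_n$ stands for the tokens of a text.

$$
P(x_1, \dots, x_n) \;=\; \prod_{t=1}^{n} P(x_t \mid x_1, \dots, x_{t-1}) \;=\; \prod_{t=1}^{n} P(x_t \mid x_{t+1}, \dots, x_n)
$$

Taking the negative logarithm of each product shows that an ideal predictor would accumulate the same total loss whether it reads the text forward or backward. One way to read the observed gap, then, is that it reflects which factorization is easier to learn in practice, not a difference in the information the text contains.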

Hongler emphasizes this point: “The discovery that LLMs are sensitive to the directionality of time in language processing suggests a deeper structural property of language itself.” The capacity to assess language through the lens of time and causality helps demystify the complexities that govern communication and narrative construction.

This research not only provides insight into the mechanics of language modeling but also hints at practical applications. Understanding the Arrow of Time bias could aid in developing AI that simulates a more natural back-and-forth conversation, particularly in applications concerning storytelling or improv—a connection that Hongler and his team made early on when collaborating with a theater school to train a chatbot for improvisational narratives.

If intelligent agents rely on such predictive biases, the effect could open avenues for research into detecting intelligence or life-like qualities in AI systems. The study also invites further exploration of the relationship between time and language, which may inform our understanding of causality and of time as an emergent phenomenon in physics.

The intricate relationship between language processing and the Arrow of Time has profound implications not just for artificial intelligence, but for our broader understanding of communication, cognition, and even the nature of time itself. As researchers delve deeper into the operational dynamics of LLMs, we stand on the brink of discovering new principles that may redefine our approach to both machine learning and the fundamental nature of intelligence.

These findings show how even a simple observation, such as a chatbot generating narratives backward, can yield insights with far-reaching implications. They are not just a testament to the capabilities of AI; they challenge our conception of language and of the cognitive processes that guide our understanding of both time and communication.