In today’s digital ecosystem, advances in artificial intelligence (AI) have made it easy to fabricate realistic images, audio, and video. That capability creates substantial risks, particularly for fraud and misinformation. AI or Not, a startup dedicated to detecting AI-generated deception, recently secured $5 million in seed funding to expand its offerings. As deepfakes become more common, financial professionals increasingly view them as existential threats, and the need for robust detection tools has become urgent.

The statistics are stark: 85% of corporate finance experts regard AI-related scams as a severe threat, and more than half report having been directly affected by deepfakes. Economic forecasts suggest that generative AI scams could cause losses exceeding $40 billion in the U.S. alone within the next two years. Companies like AI or Not are growing because organizations need tools that can keep pace with this rapidly evolving threat.

A New Wave in Fraud Detection

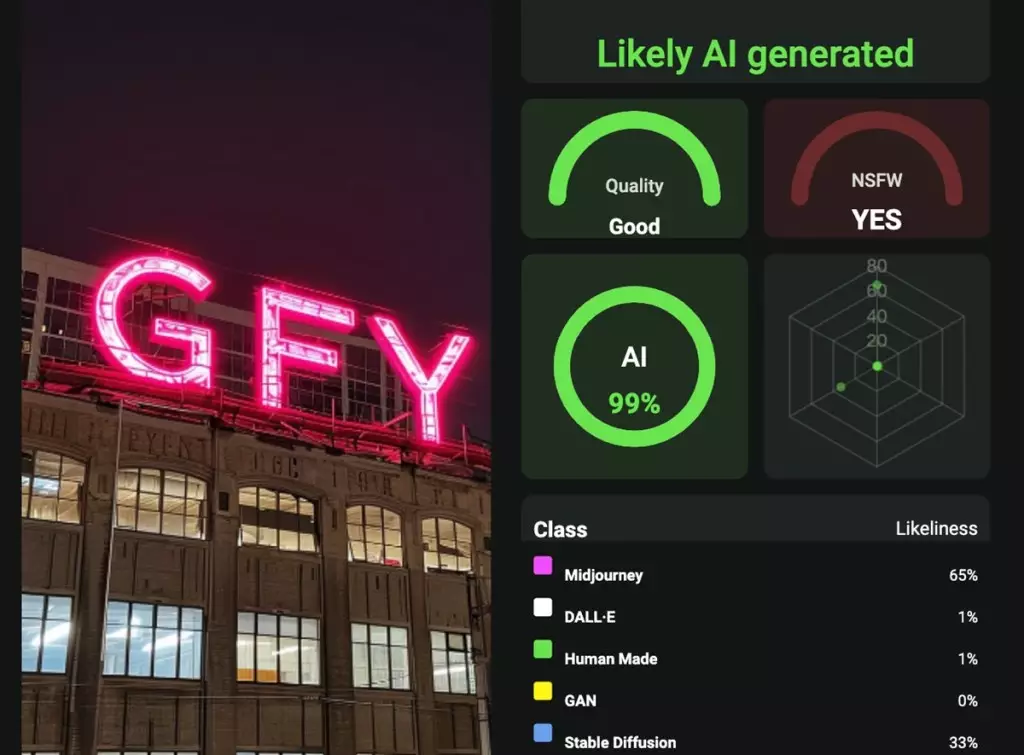

AI or Not’s approach centers on proprietary algorithms that identify and authenticate content across various media types. The technology is designed to expose AI-generated deepfakes that impersonate anyone from politicians and other public figures to ordinary citizens. This work arrives amid growing calls from consumers and regulators alike for greater transparency and authenticity in content creation.

Zach Noorani, a partner at Foundation Capital and an investor in AI or Not, pointed to an emerging crisis: organizations struggle to verify whether the information they consume through digital platforms is authentic. Generative AI has rendered traditional verification methods inadequate, creating the need for new frameworks to catch these sophisticated impostors. Noorani’s remarks reflect a growing recognition among stakeholders of the dangers involved and of the need to give users tools that can effectively counter misinformation.

The new funding will support AI or Not’s mission by giving the company the resources to further improve its detection methods. As Anatoly Kvitnisky, CEO of AI or Not, observed, generative AI cuts both ways: it unlocks new potential while also opening the door to misuse. That duality makes efficient, competitive detection tools essential for safeguarding digital environments.

AI or Not operates in a high-stakes arena where its work directly affects individuals and organizations. Its platform promises real-time detection designed to stop misinformation and fraud before they cause harm. This proactive stance toward deepfakes and other forms of AI misuse reflects a commitment to innovation and underscores the ethical obligation tech companies have to protect public trust and safety.

As AI technology continues to evolve, the misinformation landscape will likely grow even more complex. Companies like AI or Not are at the forefront of this battle, racing to develop more sophisticated countermeasures against increasingly advanced methods of deception. Given the potential for abuse of generative AI, effective detection tools must not only exist but also be robust enough to withstand even deeper layers of digital manipulation in the future.

Moreover, as individuals and organizations grapple with these dynamics, the demand for authentic digital content is likely to intensify. The backlash against tech giants over transparency has shown that the public wants authentic engagement. AI or Not’s solutions could play a crucial role in meeting this demand and helping to restore trust amid widespread skepticism.

AI or Not is not merely advancing technology; it is building a response to a growing crisis of credibility that could redefine how people interact in the digital age. Its growth and strategic funding reflect a broader shift toward proactive measures for safeguarding authenticity, offering a glimmer of hope in an era increasingly overshadowed by doubt. As the company pursues its mission, its implications for fraud prevention and digital integrity bear watching, sitting at a crucial intersection of technology and ethics.